Welcome to the benchmark notebook for the Pose Bowl: Object Detection challenge!

If you are just getting started, first checkout the competition homepage and problem description.

Pose Bowl: Object Detection¶

In this challenge, you will help to develop new methods for conducting spacecraft inspections by identifying the position and orientation of a target spacecraft in an image.

The goal of this $12,000 challenge is to help NASA address key operational hurdles in spacecraft inspection, including the ability to detect a variety of different types of target spacecraft, as well as being able to run this code efficiently on relatively low-cost hardware. For the latter reason, this challenge is a code execution challenge, and your submission will be run on our code execution platform in an environment that simulates a small, off-the-shelf computer board used on R5 NASA spacecraft.

If you're not familiar with code execution challenges, don't worry! This benchmark notebook/blog post will help you get comfortable with the setup and on your way to producing your own challenge submissions.

We'll cover two main areas in this post:

Section 1. Getting started: An introduction to the challenge data, including image examples and some explanation about the type of variation to expect across the dataset.

Section 2. Demo submission: A demonstration of how to run the benchmark example and produce a valid code submission.

Section 1: Getting started¶

Get this notebook¶

First, clone the runtime repository with:

git clone https://github.com/drivendataorg/spacecraft-pose-object-detection-runtime.gitThe repository includes a copy of this notebook in /notebooks/data-exploration-and-benchmark.ipynb. We'd encourage you to run this notebook yourself as part of this exercise.

You will also need to set up your environment. For the purposes of this notebook, you will just need to the following libraries, which you can install with the following pip command.

pip install jupyterlab pandas pyarrow opencv-python matplotlib ultralyticsDownload some data¶

Let's first download some challenge imagery from the Data Download page. The imagery dataset comes in the form of .tar files, each of which is about 2.5 GB. For this benchmark example, we'll just download the 0.tar file.

Downloading imagery may take some time, so feel free to do something else for a little while. (The code submission format page and this runtime repository's README are relevant background reading for what we're about to do next.)

Once the tar file has been downloaded, you can extract it to the data_dev directory (the command below should work on a Unix-based system). We suggest saving the images to the data_dev directory (or something similarly named) rather than the data directory because the data directory plays a special role in simulating the test data that is available when your submission runs in the code execution environment.

tar -xzvf 0.tar -C data_dev/You'll also want to download the submission_format.csv, train_labels.csv and train_metadata.csv from the Data Download page and save these in the same data_dev directory.

Once everything is downloaded, your data_dev directory should look like this.

spacecraft-pose-object-detection-runtime/

└── data_dev

├── images

│ ├── 0001954c9f4a58f7ac05358b3cda8d20.png

│ ├── 00054819240f9d46378288b215dbcd3a.png

│ ├── 000dbf763348037b46558bbcb6a032ac.png

│ ...

│

├── submission_format.csv

├── train_labels.csv

└── train_metadata.csvExplore the data¶

Now it's time to get acquainted with the challenge data. Below we define locations for some of the important files.

from pathlib import Path

import numpy as np

import pandas as pd

PROJ_DIRECTORY = Path.cwd().parent

DATA_DIRECTORY = PROJ_DIRECTORY / "data"

DEV_DIRECTORY = PROJ_DIRECTORY / "data_dev"

IMAGES_DIRECTORY = DEV_DIRECTORY / "images"

Let's take a look at two of the metadata files.

train_meta = pd.read_csv(DEV_DIRECTORY / "train_metadata.csv", index_col="image_id")

train_labels = pd.read_csv(DEV_DIRECTORY / "train_labels.csv", index_col="image_id")

The train_metadata.csv contains information about the type of spacecraft and background used to generate the image. You should consider ways to use this information effectively in stratifying your train and test splits. The test data that you will be evaluated on includes spacecraft types that are not included in the training set, so you will want to consider strategies for ensuring your model generalizes well. You may also want to consider ways of generating additional training data, e.g. to generate a more diverse variety of background imagery.

The train_metadata.csv contains information about all images in the training set. But since we haven't downloaded all the images yet, let's filter the dataset down to just the images we've saved locally.

# we didn't download the full training set, so add a column indicating which images are saved locally

train_meta["exists"] = train_meta.index.to_series().map(lambda x: (IMAGES_DIRECTORY / f"{x}.png").exists())

# filter our metadata down to only the images we have locally

train_meta = train_meta[train_meta.exists]

train_meta.head()

The train_labels.csv contains the bounding box information for the target spacecraft in each image.

train_labels.head()

Let's look at a few example images to get a feel for what's in this dataset.

import cv2

import matplotlib.pyplot as plt

from matplotlib.patches import Rectangle

def get_bbox(image_id, labels):

"""Get bbox coordinates as list from dataframe for given image id."""

return labels.loc[image_id].loc[["xmin", "ymin", "xmax", "ymax"]].values.tolist()

def display_image(image_id, images_dir=IMAGES_DIRECTORY, show_bbox=False, labels=train_labels):

"""Display image given image ID. Annotate with bounding box if `show_bbox` is True."""

img = cv2.imread(str(images_dir / f"{image_id}.png"))

fig, ax = plt.subplots()

# cv2 reads images as BGR order; we should flip them to RGB for matplotlib

# ref: https://stackoverflow.com/questions/54959387/rgb-image-display-in-matplotlib-plt-imshow-returns-a-blue-image

ax.imshow(np.flip(img, axis=-1))

if show_bbox:

xmin, ymin, xmax, ymax = get_bbox(image_id, labels)

patch = Rectangle((xmin, ymin), xmax - xmin, ymax - ymin, fill=False, edgecolor='white', linewidth=1)

ax.add_patch(patch)

Some images are relatively straightforward with an easily identifiable spacecraft. Below is one example. Note that the spacecraft is not necessarily fully in view. Also note that the background in this case consists entirely of our planet, with no view of outer space, while in other images it may be entirely space, or more commonly a mix of planet and space.

display_image("07dd7b5a0b7b224abc0a7fea8e78de76", show_bbox=True)

There are many examples where the spacecraft will be more difficult to detect.

One challenging type of image involves target spacecraft that appear very small, due to their distance from the chaser spacecraft. Here is one example of that situation.

display_image("0d4b4eda4bf7c0251399047d71cc4188", show_bbox=True)

Lens flare and other visual artifacts from the refraction of light on the camera lens may also complicate your detection algorithm, as in the example below.

display_image("01fcd95cdcf8bb84ec4dfa7b87bf2abc", show_bbox=True)

Some target spacecraft may have long, thin appendages that are difficult or even impossible to see. Remember that your bounding box needs to encompass these appendages.

display_image("0263257179fcf14793f40430713ece44", show_bbox=True)

Finally, there will be situations where parts of the spacecraft are obscured by shadow. This can make it particularly difficult to detect the edges of thin appendages like the ones we saw above.

display_image("0eee2fcf59ec1e7641db1f8284c1d935", show_bbox=True)

Explore for yourself¶

The code below will pull and display a random image. Feel free to run this as many times as you like to develop your intuition for what types of images are in this dataset.

image_id = train_meta.sample(1).index[0]

print(image_id)

ax = display_image(image_id, show_bbox=True)

Section 2: Demo submission¶

Now that we've had a chance to get a feel for the data, it's time to walk through the steps for creating a competition submission.

This is a code submission competition, so our focus for now will be on creating that submission in the correct format, and less so on the accuracy of the predictions. To get you started, we'll be using a YOLO model to try to identify the target spacecraft in 100 local test images.

If you haven't already read through the following resources, now would be a great time to do that:

- Code Submission Format page: An introduction to the code submission setup and how to make a valid submission.

- Runtime Repository README: Details on the competition runtime and how to use this repository effectively.

Using the /data directory¶

The /data directory in this runtime repository plays two important roles:

- When you are testing your solution locally, the contents of

/datawill be mounted on the Docker container that simulates our code execution platform (further below, we'll walk through the specifics of how to do these test runs) - When you make a submission on the DrivenData platform, the contents of

/datathat get mounted to the Docker container will be the unseen test data that ultimately determines your rank on the leaderboard.

To develop your submission locally, you should add a subset of the challenge data to /data and treat this as your local test set. We'll demonstrate one way to do that next...

First, let's select a random subset of 100 images in /data_dev to treat as our local test set.

test_images = train_meta.sample(100, random_state=1).index.tolist()

test_images[:5]

Next, we'll move these files into the /data directory.

import shutil

dest_dir = DATA_DIRECTORY / "images"

if not dest_dir.exists():

dest_dir.mkdir(parents=True, exist_ok=True)

for img in test_images:

shutil.copy2(IMAGES_DIRECTORY / f"{img}.png", dest_dir / f"{img}.png")

Next we'll add a "submission format" CSV file to /data.

Note: A submission format file in this case is simply a CSV file that has the correct column and row indices required for a valid submission. Using this type of standard file is a useful way to ensure that all participants understand how their submission needs to be formatted in order to be accepted, and we tend to use these in most of our challenges.

A submission format file for the full training set should already be present in /data_dev. We'll make a copy of this that only includes rows pertaining to the images in our local test set, and save it in /data.

submission_format = pd.read_csv(DEV_DIRECTORY / "submission_format.csv", index_col="image_id")

submission_format_val = submission_format.loc[test_images]

submission_format_val.to_csv(DATA_DIRECTORY / "submission_format.csv")

Let's also create a version of the labels file that only includes rows pertaining to our local test set. We can use this later for local scoring (see below).

train_labels = pd.read_csv(DEV_DIRECTORY / "train_labels.csv", index_col="image_id")

test_labels = train_labels.loc[test_images]

test_labels.to_csv(DATA_DIRECTORY / "test_labels.csv")

Download a pretrained model¶

For this benchmark example, we will rely on YOLO, a commonly used algorithm for object detection. This will suit our purposes for demonstrating a very basic baseline approach, but you should explore more sophisticated methods yourself.

To download the latest v8 YOLO model, we'll use the ultralytics library. The code below will download the model and move it into the example_benchmark directory, where we're assembling the components of our submission.

from ultralytics import YOLO

model = YOLO("yolov8n.pt")

shutil.move('yolov8n.pt', PROJ_DIRECTORY / "example_benchmark" / "yolov8n.pt")

Review the benchmark submission scripts¶

Now let's take a look at the 2 files in /example_benchmark. This is the directory we are going to convert into a submission.zip file, which you can submit for the challenge.

- The

main.shshell script is a required file for any submission to this challenge. Our code execution platform will run this script, and you can have it call other scripts and resources as needed for your submission. - The

main.pyPython script is called bymain.shin this benchmark example, and this is where the work of generating predictions actually happens. There is no requirement that you also use Python here, but that's the approach we've taken since it is such a common one. Themain.pyscript will iterate through all the images in thesubmission_format.csvand generate predictions using YOLO. If YOLO doesn't return a prediction, we simply generate a bounding box for the center of the image.

The example_benchmark directory should now contain the following files. Note that we do need to include the yolov8n.pt file because the submission will have no internet access when running on our platform.

spacecraft-pose-object-detection-runtime/

└── example_benchmark/

├── main.py

├── main.sh

└── yolov8n.ptmake pull¶

To ensure that all participants are using the same runtime environment, we have a publicly accessible docker image hosted on Azure Container Registry.

The make pull command pulls the official version of the docker image and stores it locally. Having a local version of the competition image allows you to test your submission using the same image that is used during code execution.

Note: This command can take a little while to run the first time you pull the image. But after that it will be relatively quick since you'll have all the layers cached locally. You don't need to pull the image again each time you test your submission, unless the image has changed.

!cd {PROJ_DIRECTORY} && make pull

You should now have a local copy of the docker image, which you can verify by running:

!docker images | grep spacecraft-pose-object-detection

make pack-benchmark¶

This command simply goes to your example_benchmark directory, zips the contents, and writes the zip archive to submission/submission.zip.

!cd {PROJ_DIRECTORY} && make pack-benchmark

Note: The

make pack-benchmarkcommand will check to see if you already have asubmission/submission.zipand error if you do, so as not to overwrite existing work. If you already have this file, you'll need to manually remove it before running the command.

After running the above command, we should now have a new submission/submission.zip.

spacecraft-pose-object-detection-runtime/

├── benchmark_src/

│ ├── main.py

│ ├── main.sh

│ └── yolov8n.pt

└── submission/

└── submission.zip <---- new file, this is what gets submitted on platformThis is the file that we will eventually upload to the competition platform for code execution. But before doing that, we want to test it locally.

make test-submission¶

This command simulates what happens during actual code execution, launching an instance of the official Docker image and running the same inference process that runs on the competition platform. The required host directories are mounted on the container, and the entrypoint script main.sh is executed. Note that when testing locally the contents of your local data/ directory will be mounted on the container, whereas when your submission is running on our platform, the unseen test set will be mounted as data/.

!cd {PROJ_DIRECTORY} && make test-submission

Once the test run has completed, we should now have a new file with our predictions at submission/submission.csv.

spacecraft-pose-object-detection-runtime/

├── benchmark_src/

│ ├── main.py

│ ├── main.sh

│ └── yolov8n.pt

└── submission/

├── submission.zip <---- this is what gets submitted on platform

└── submission.csv <---- new file, predictions on test setWe also provide a scoring script that computes your score using the same calculation that's used for the public leaderboard. You can generate a score for your local testing with a command like the one below. Remember that this score will be computed on your local test set, and your score on the public leaderboard will be based on an unseen test set.

python scripts/score.py submission/submission.csv data/test_labels.csvSubmitting to the platform¶

We're almost done. Assuming that our test run completed and the submission.csv looks correct, it's time to submit the code on the platform.

- Go to the competition submissions page and upload your

submission/submission.zip. - Please be patient while your submission is uploaded and executed. Your job may be queued if other jobs are still pending.

- You can track the status of your submission on the Code Execution Status page. Logs will become available once the submission begins processing. To see them click on "View Log".

Once your submission has been successfully uploaded, you will see something like this on the Code Execution Status page:

Please be patient while your code is running. You may want to follow the links to check the logs for your job, which are live updated as your code job progresses.

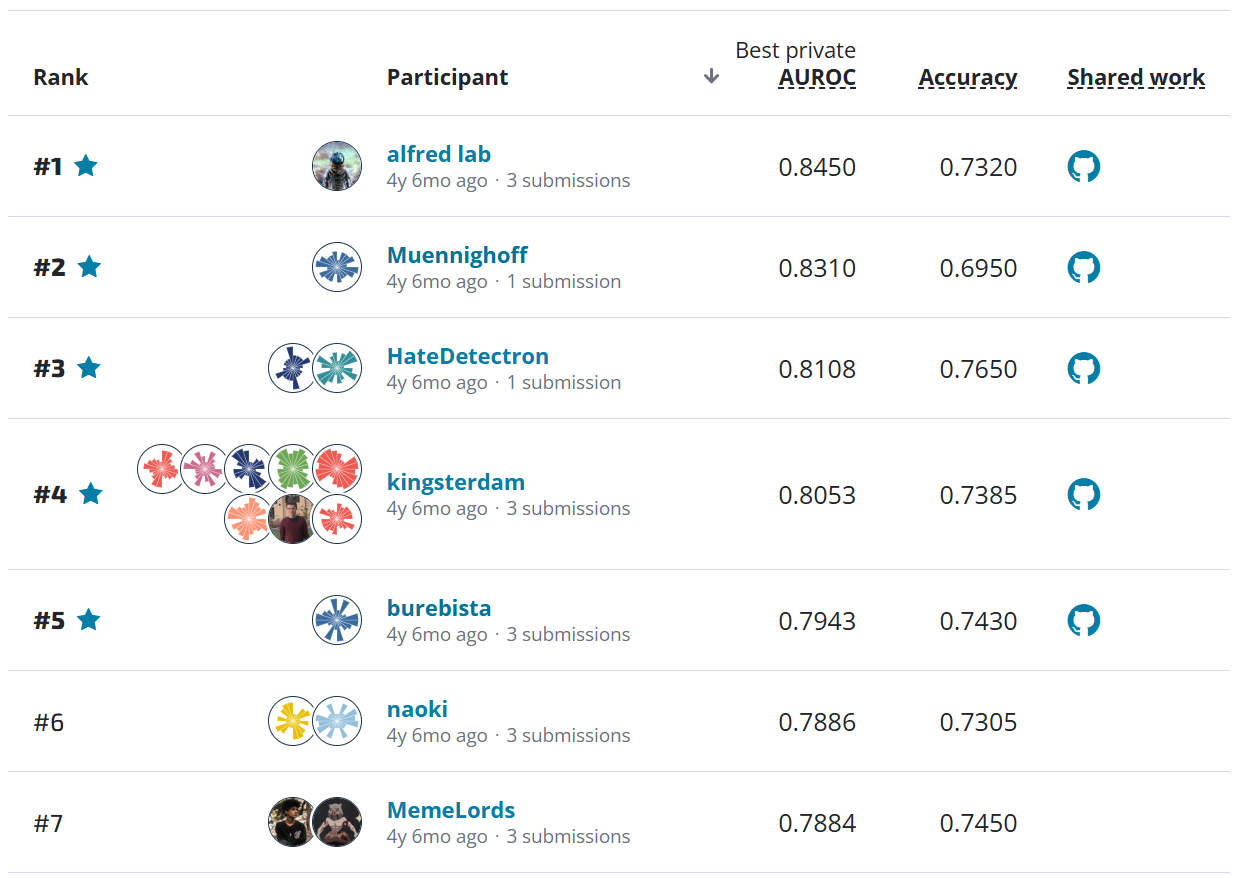

Once your job has completed, head over to the Submissions page where you should be able to see your score. It will look something like this, except that we're sure you can do better than the benchmark!

That's it! You're on your way to creating your own code submission!

Head over to the competition homepage to get started. And have fun! We can't wait to see what you build!

Images courtesy of NASA.