by

Robert Gibboni

The Challenge¶

Motivation¶

Much of the world's healthcare data is stored in free-text documents, usually clinical notes taken by doctors. This unstructured data can be challenging to analyze and extract meaningful insights from. However, by applying a standardized terminology like SNOMED CT, healthcare organizations can convert this free-text data into a structured format that can be readily analyzed by computers, in turn stimulating the development of new medicines, treatment pathways, and better patient outcomes.

One way to analyze clinical notes is to identify and label the portions of each note that correspond to specific medical concepts. This process is called entity linking because it involves identifying candidate spans in the unstructured text (the entities) and linking them to a particular concept in a knowledge base of medical terminology. Entity linking medical notes is no small feat; notes are often rife with abbreviations and assumed knowledge, and the knowledge base itself can include hundreds of thousands of medical concepts.

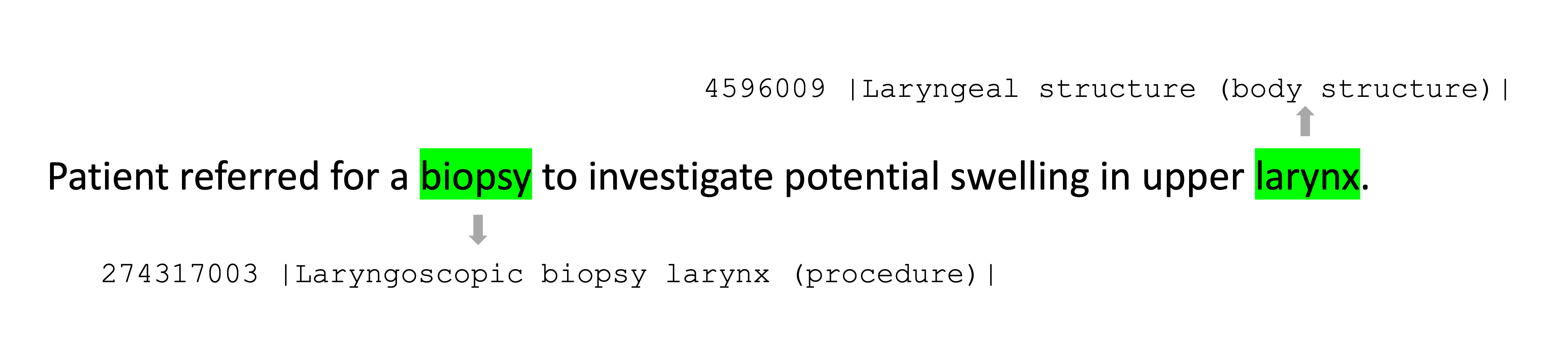

The objective of the SNOMED CT Entity Linking Challenge was to link spans of text in clinical notes with specific topics in the SNOMED CT clinical terminology. Below is a synthetic example that illustrates the basic structure of the task.

A synthetic example of medical text and annotations. The text indicating the concepts are highlighted in green, and the indicated concept IDs, names, and categories are shown.

Participants built systems based on de-identified real-world doctor's notes that were annotated with SNOMED CT concepts by medically trained professionals. This is the largest publicly available dataset of labelled clinical notes, and the challenge participants were among the first to use it!

Results¶

At the start of the competition, DrivenData released a benchmark solution developed by our partners at Veratai. Approach of the benchmark was to separate entity linking into two stages: first, employ a fine-tuned BERT-based model to detect spans of text that are likely to be medical concepts, then link those extracted spans to SNOMED CT concepts. The competition metric was based on the character intersection-over-union (IoU) of predicted and actual spans, macro-averaged by class to weight all classes equally, regardless of the frequency of each class in the dataset. The benchmark achieved a score of 0.1794 by that metric; by the close of the competition, 25 participants had surpassed the benchmark score! Over the course of competition, we received more than 350 submissions from participants and teams around the world.

Overall results of benchmark and winning solutions in terms of the competition metric, class macro-averaged character intersection-over-union (IoU).

The winning teams drew on a diverse set of approaches to data, algorithms, and everything in between. Some approaches included curating a large set of open-source medical synonyms and abbreviations (nearly one million!), incorporation of simple linguistic rules, low-rank approximation (LoRA) fine-tuning state-of-the-art large language models (LLMs), prompt engineering, and retrieval augmented generation (RAG) to enrich the LLM prompt context with relevant documents. You can find more information on each of the winning approaches below.

The ongoing importance of this work was highlighted by the organizers at SNOMED International:

SNOMED International thanks all the organizations that worked with us on this initiative and all of those who entered and completed the challenge.

The better we understand how SNOMED CT can be used to analyze and extract meaning from clinical notes, the greater impact it will have. And rather than being just an esoteric exercise, this competition will result in the development of tools and techniques that can be shared within the SNOMED CT and broader health standards and research communities and used to unlock and supercharge the clinical data currently captured in free text. That is how we demonstrate the deep capabilities of SNOMED CT, whether to support the interoperability of patient records or to mine clinical data for a multitude of research purposes.

Don Sweete, CEO, SNOMED International

Let's get to know our winners and how they excelled at deciphering the mysterious language of clinical notes! You can also dive into their open source solutions in the competition winners repo on GitHub.

Meet the winners¶

Guy Amit, Yonatan Bilu, Irena Girshovitz & Chen Yanover (KIRIs)¶

|

|

|

|

Place: 1st Place

Prize: $12,500

Hometown: Kfar Malal, Israel

Usernames: guyamit, YonatanBilu, IrenaG & cyanover

Background

We are a team of researchers from the KI research institute in Israel, a non profit promoting applied research in computational health. Guy, Yonatan and Chen received their PhD in computer science some 20 years ago, while Irena is catching up to them these days. Before joining the KI research institute Guy, Yonatan and Chen worked in the Haifa IBM Research lab on machine-learning projects; Guy on medical imaging, Yonatan on computational argumentation and Chen on analysis of electronic health records. Irena used to work for Medial Research, a company developing machine-learning solutions for early detection of disease.

What motivated you to compete in this challenge?

We have always been interested in leveraging NLP tools for benefit in the medical domain. This became especially attractive with recent advancements, and in particular the rapid improvements in LLMs. We hoped that by participating in the challenge we will become familiar with the current tools, and be able to evaluate their usefulness.

Summary of Approach

Initially we aimed to develop an LLM-based solution, but as a baseline for comparison we also tried a simple dictionary-based approach. We proceeded in parallel, but fairly early on it seemed that in the context of this challenge, with the available annotated data, the dictionary approach was more promising. This shifted our focus to an iterative process of error analysis and improving the dictionary construction algorithm.

The solution is mostly based on a dictionary that maps pairs of (section header, mention) to a SNOMED CT concept ID. In the offline training phase the dictionary is constructed from two sources – the training data and the SNOMED CT concept names (augmented with OMOP-based synonyms). The dictionary is then expanded by some simple linguistic rules, and by permuting multiword expression. More precisely, two dictionaries are constructed – one which is not case sensitive, and one which is. In the inference phase each document is processed independently of the others. It is broken into sections, and in each section the relevant mentions are matched with the text. Successful matches are annotated with the corresponding concept ID. This is done with both dictionaries. Overlaps are then removed by preferring longer mentions, and section-specific keys to general ones. Finally, in a post processing phase mentions may be expanded and annotations made more specific based on SNOMED CT relations.

Check out the team's full write-up and solution in the competition repo.

Mikhail Kulyabin, Gleb Sokolov & Aleksandr Galaida (SNOBERT)¶

Place: 2nd Place

Prize: $7,500

Hometown: Erlangen, Germany and Yerevan, Armenia

Usernames: MikhailK, bproduct & dogwork

Background

Mikhail Kulyabin received an Engineer's degree in mechanical engineering from Bauman Moscow State Technical University (BMSTU) and a M.Sc. degree in computational engineering from Friedrich-Alexander-Universität Erlangen-Nürnberg (FAU), where he is currently pursuing the Ph.D. in computer science. His main research interest lies in applying AI to topics related to ophthalmology.

Gleb Sokolov is an experienced deep learning engineer with a strong background in computer vision. He is a Kaggle grandmaster.

Aleksandr Galaida received a Bachelor's degree in Computer Science from Moscow Institute of Physics and Technology State University (MIPT). He is an experienced computer vision engineer specializing in R&D tasks, having worked for more than five years in the scientific laboratory and product teams.

What motivated you to compete in this challenge?

Interest in a new area for us, gaining skill in working with language models.

Summary of Approach

The solution consists of two stages: - First stage: train NER segmentation task with four classes ('find', 'proc', 'body', 'none'); - Second stage: for each span from the first stage, predict its SNOMED ID.

Check out the team's full write-up and solution in the competition repo.

Vincenzo Della Mea, Mihai Horia Popescu & Kevin Roitero (MITEL-UNIUD)¶

|

|

|

Place: 3rd Place

Prize: $5,000

Hometown: Udine, Italy

Usernames: vdellamea, mihaihoria.popescu & kevinr

Background

MITEL (Medical Informatics, Telemedicine & eHealth Lab) is an academic laboratory within the Department of Mathematics, Computer Science, and Physics at the University of Udine, Italy, mainly specialized in two research areas: digital pathology, and biomedical classifications and ontologies. Regarding the latter, MITEL from one side contributes to the development of WHO classifications and their tools, from the other autonomously researches methods for clinical document coding and interpretation with NLP techniques. The participation in the present challenge fits well in the latter research area, in particular because it regards the perhaps most prominent clinical terminology currently available. Mihai Horia Popescu is PhD candidate in Computer Science and Artificial Intelligence; Kevin Roitero is a tenure track Assistant Professor; Vincenzo Della Mea is Associate Professor and head of the Lab.

What motivated you to compete in this challenge?

SNOMED-CT and the International Classification of Diseases (ICD) are pivotal in clinical terminology and classification. Leveraging our experience with ICD automated coding, we aim to focus on SNOMED CT by engaging in this competition. The provided data will significantly aid our research in automated clinical document coding and annotation, aligning with the interests of the Challenge sponsors and demonstrating the primary application of SNOMED CT.

Summary of Approach

Our approach to the challenge involves two primary tasks: first, an entity recognition task aided by a Large Language Model (LLM), which involves annotating terms within the input text by means of a specific prompt tailored on annotating clinical entities. Secondly, we implement classification in two stages: an initial document retrieval task carried out using the vector database Faiss, followed by a classification task performed leveraging an LLM, again with a specific prompt.

Check out the team's full write-up and solution in the competition repo.

Thanks to all the participants and to our winners! Special thanks to the SNOMED International, Veratai, and PhysioNet for enabling this important and interesting challenge and for providing the data to make it possible!

LABS

LABS