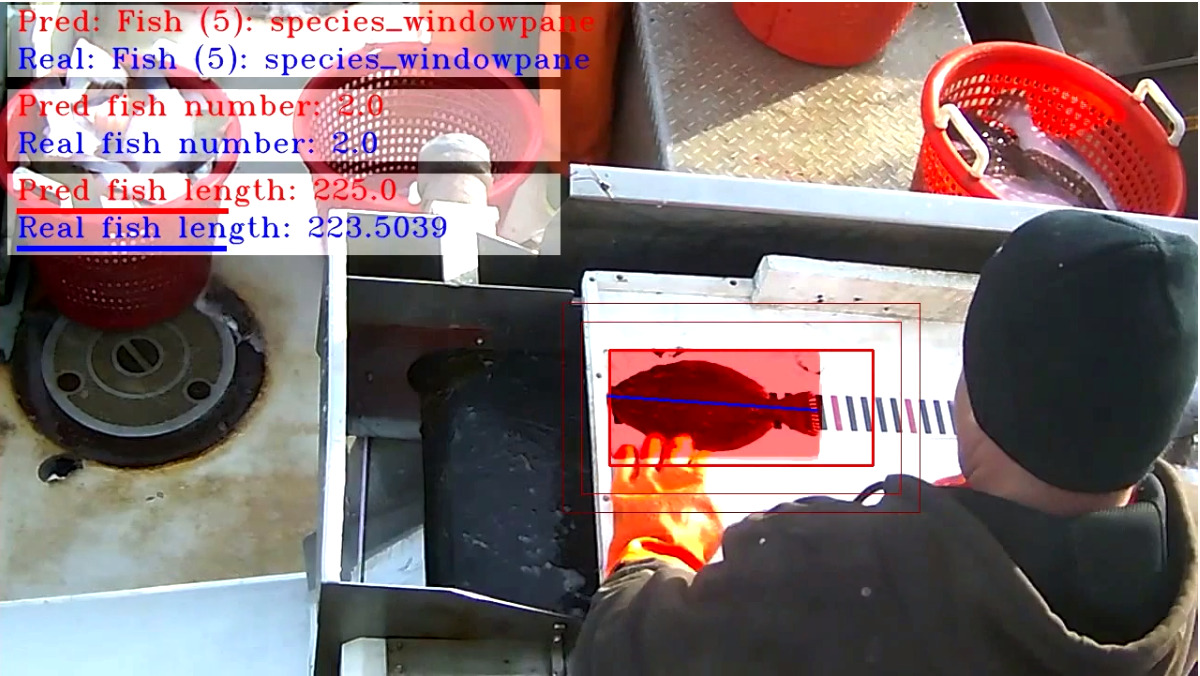

A video of machine learning in action by 2nd Place winner ZFTurbo

Deep learning for sustainable fisheries

Meet the winners of the N+1 Fish, N+2 Fish challenge!

Every day, boats small and large leave from docks along the New England coast in search of fish. Today’s fishermen work hard to catch enough to make a living, while leaving enough fish for the next season.

The US is considered a global leader in fisheries management because of its strong commitments to keeping fish populations at healthy levels. This puts additional requirements on fishing boats to demonstrate they’re meeting those standards. Electronic monitoring systems, like video cameras, could offer an affordable way for fishermen to show their work and keep consumers and fisheries managers confident in the sustainability of their seafood.

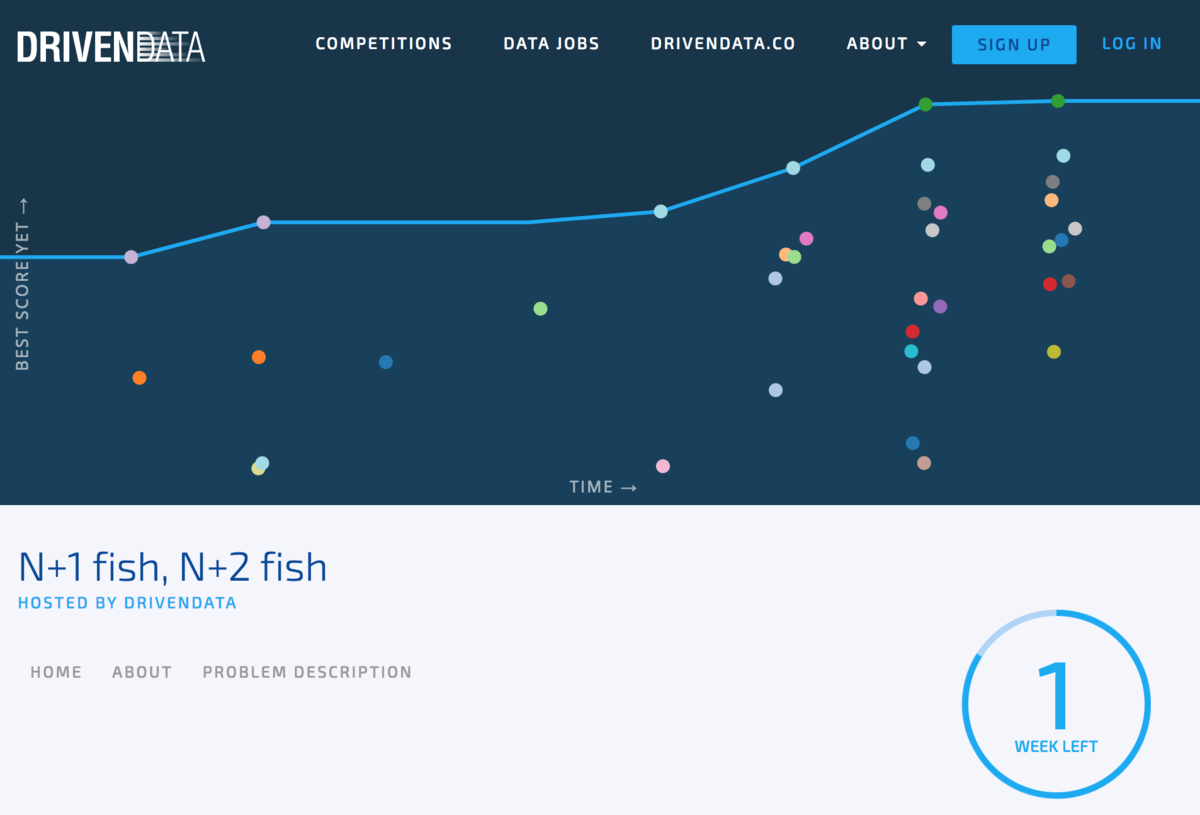

In this competition, data scientists from around the world built algorithms to count, measure, and identify the species of fish from on-board footage. Better machine learning tools have the potential to save a huge amount of time sifting through videos while lowering the safety risk and added cost from having an auditor on board.

The N+1 Fish, N+2 Fish competition was the first to use actual fishing video to develop tools that can classify multiple parameters, such as size and species. With more and more fisheries adopting video camera monitoring, the results of this competition have real-world impacts for ocean conservation and fishing communities alike.

We saw amazing progress as submissions came in over the course of this competition, pushing forward the boundary of what these algorithms could achieve. Meet the winners below and hear how they managed to outswim the rest of the field!

Meet the winners

Dmytro Poplavskiy

Place: 1st

Prize: $20,000

Hometown: Brisbane, Australia

Username: dmytro

Background: I’m an experienced software engineer with a recent interest in machine learning. I started participating in machine learning competitions around a year ago and have managed to finish within the top 5 places in the four competitions I have participated in so far.

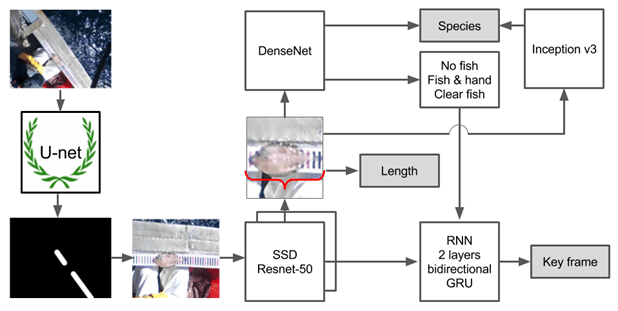

Summary of approach:

- Find the position and orientation of the ruler using UNET

- Crop frames so the ruler is always horizontal and in the center of the frame

- Detect fish using an SSD network

- Crop the detected fish, if any, and use a separate classification network to find the species and whether the fish is partially covered

- Use an RNN, fed with the results of the detection and classification networks, to find the frames where each unique fish is clearly visible

- Use the width of the box found by SSD, converted back to original frame coordinates, as the fish size
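The last step of dmytro’s pipeline measures the fish in the rotated, cropped frame and then maps that measurement back to the original frame. A minimal sketch of the coordinate conversion follows, assuming the crop is described by a center point, a rotation angle and a resize factor; this crop model and the function names are hypothetical and not taken from the winning code.

```python
import math


def crop_to_frame(x, y, center, angle, scale, crop_size):
    """Map a point from the rotated, resized crop back to original frame coordinates.

    Hypothetical crop model: the crop is centered on `center` in the original
    frame, rotated by `angle` radians so the ruler is horizontal, and resized
    by `scale` crop pixels per original pixel.
    """
    cw, ch = crop_size
    # Offset from the crop center, with the resize undone
    dx = (x - cw / 2) / scale
    dy = (y - ch / 2) / scale
    # Rotate the offset back into the frame's axes (sign of `angle` is an assumed convention)
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    fx = center[0] + dx * cos_a - dy * sin_a
    fy = center[1] + dx * sin_a + dy * cos_a
    return fx, fy


def fish_length_from_box(box, center, angle, scale, crop_size):
    """Fish size = width of the SSD box (in crop pixels), measured in original frame pixels."""
    x_min, y_min, x_max, y_max = box
    y_mid = (y_min + y_max) / 2
    # Endpoints of the box's horizontal mid-line, which is parallel to the ruler
    p1 = crop_to_frame(x_min, y_mid, center, angle, scale, crop_size)
    p2 = crop_to_frame(x_max, y_mid, center, angle, scale, crop_size)
    return math.dist(p1, p2)
```

Because rotation preserves distances, only the resize factor changes the measured width in this model: a 200-pixel box width in a crop resized by 0.5 corresponds to 400 pixels in the original frame.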

Check out dmytro’s full write-up and solution in the competition repo.

Roman Solovyev

Place: 2nd

Prize: $15,000

Hometown: Moscow, Russia

Username: ZFTurbo

Background: I have a Ph.D. in microelectronics. I currently work as a Leading Researcher at the Institute of Designing Problems in Microelectronics (part of the Russian Academy of Sciences). I have been taking part in machine learning competitions regularly for the last 2 years. I have extensive experience with GBM, neural nets and deep learning, as well as with developing CAD programs in different programming languages (C/C++, Python, TCL/TK, PHP, etc.).

Summary of approach:

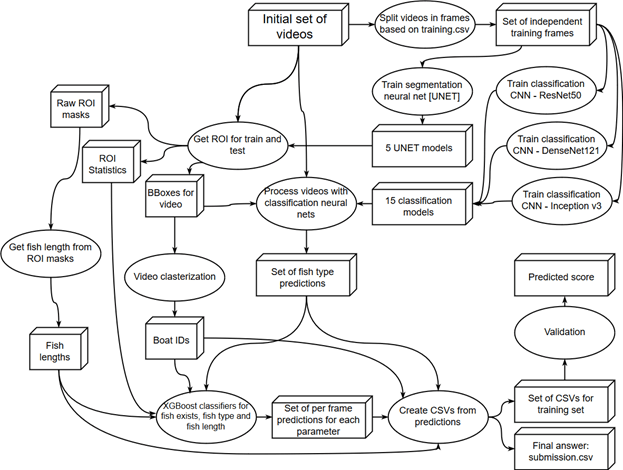

Stage 1: All videos are split into separate frames, keeping the frames for which we have data in training.csv. For each such frame, a rectangular mask is generated at the location of the fish.

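Stage 1 turns each length annotation into a rectangular training mask for UNET. The sketch below assumes each annotation gives the two endpoints of the fish line as (x1, y1) and (x2, y2), and draws an axis-aligned rectangle around them with a hypothetical padding margin; the exact rectangle ZFTurbo used may differ.

```python
import numpy as np


def fish_mask(height, width, x1, y1, x2, y2, pad=20):
    """Binary mask (0/255) with a rectangle around the annotated fish line.

    (x1, y1)-(x2, y2) are assumed to be the endpoints of the length
    annotation; `pad` is a hypothetical margin in pixels.
    """
    mask = np.zeros((height, width), dtype=np.uint8)
    # Axis-aligned bounds of the line, padded and clipped to the frame
    left = max(int(min(x1, x2)) - pad, 0)
    right = min(int(max(x1, x2)) + pad, width - 1)
    top = max(int(min(y1, y2)) - pad, 0)
    bottom = min(int(max(y1, y2)) + pad, height - 1)
    mask[top:bottom + 1, left:right + 1] = 255
    return mask
```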

Stage 2: These initial data are then used to train a network based on the UNET architecture.

Stage 3: Process all videos from the train and test sets with the UNET models.

Stage 4: Use the bounding boxes obtained in the previous step to determine the type of fish, using transfer learning.

Stage 5: There were only 4 different boats in the training and test sets, so I decided to assign a «Boat ID» to each video.

Stage 6: Having the trained models for classification and the bounding boxes with position of the fish, we can predict the type of fish or its absence.

Stage 7: To find the length of the fish, I simply used the region of interest (ROI) predictions from UNET. The probabilities were cut off at a threshold (in my case 70/255 and 200/255), and a bounding box was found for the resulting binary mask. Depending on the Boat ID, either the height, the width or the diagonal of the bounding box was selected as the length value.
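Stage 7 can be sketched as a threshold, a bounding box and a choice of side. This is an illustration under assumptions: the probability map is taken to be on a 0 to 255 scale (matching the 70/255 and 200/255 cut-offs), and the mapping from Boat ID to "height", "width" or "diagonal" is left to the caller because the write-up does not list it.

```python
import numpy as np


def length_from_mask(prob, threshold, mode):
    """Fish length from a UNET ROI probability map with values in 0..255.

    mode: "width", "height" or "diagonal" of the bounding box; which one to
    use for each Boat ID is chosen by the caller (mapping not given here).
    """
    ys, xs = np.nonzero(prob > threshold)  # binary mask after the cut-off
    if xs.size == 0:
        return 0.0  # nothing above the threshold: no fish found
    w = xs.max() - xs.min() + 1
    h = ys.max() - ys.min() + 1
    if mode == "width":
        return float(w)
    if mode == "height":
        return float(h)
    if mode == "diagonal":
        return float(np.hypot(w, h))
    raise ValueError(f"unknown mode: {mode}")
```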

Stage 8: Three different GBM models were prepared:

- a model to determine whether or not there is a fish in the given frame

- a model to determine the type of fish

- a model to determine the length of the fish (not used in the final submission)

Stage 9: Based on the data obtained, I needed to create the answer in the submission format.

Stage 10: All CSV files for the test data were merged into a single CSV file.

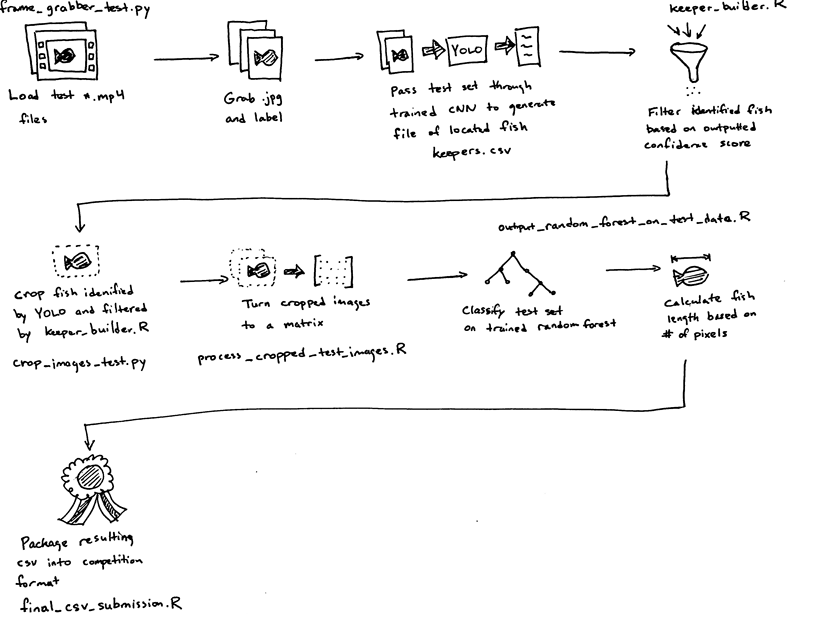

Full dataflow in one image:

Check out ZFTurbo’s full write-up and solution in the competition repo.

Daniel Fernandez

Place: 3rd

Prize: $10,000

Hometown: Devon, England

Username: Daniel_FG

Background: I am a Building Services Design Engineer, specializing in bioclimatic design and energy efficiency. I am from Spain, but I currently live in Devon, England. Three years ago I started learning about machine learning and artificial intelligence, and I have been taking part in data science competitions since then. I am now looking for new job opportunities in these areas.

Summary of approach:

Region Of Interest (ROI): I trained a typical UNET with some modifications to detect the ROI for each video. The inputs are 16 random frames of a video file, scaled to 352x192 pixels and converted to B&W. The target is the sum of all annotations (lines representing the fish length) in that video file. The network predicts the line along which the fish are located, and I extracted a 448x224 pixel patch around it. This training stage could be omitted, because the test set contains the same boats as the training set and the camera has a fixed location in all cases.
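The ROI step reduces each video to a fixed patch around the predicted fish line. Below is a minimal sketch, assuming the UNET output for a video is a single probability mask at the 352x192 input resolution and that the patch is centered on the mask’s bright pixels; `extract_roi` and the 0.5 cut-off are hypothetical.

```python
import numpy as np


def extract_roi(frame, line_mask, patch_w=448, patch_h=224):
    """Crop a fixed-size patch centered on the predicted fish line.

    `line_mask` is a hypothetical UNET output (probabilities) at a lower
    resolution than `frame`. Assumes the frame is at least patch-sized.
    """
    fh, fw = frame.shape[:2]
    mh, mw = line_mask.shape
    ys, xs = np.nonzero(line_mask > 0.5)
    if xs.size == 0:
        cx, cy = fw / 2, fh / 2  # no line found: fall back to the frame center
    else:
        # Center of the predicted line, rescaled from mask to frame pixels
        cx = (xs.mean() + 0.5) * fw / mw
        cy = (ys.mean() + 0.5) * fh / mh
    # Clamp the patch origin so the patch stays inside the frame
    x0 = int(np.clip(round(cx - patch_w / 2), 0, fw - patch_w))
    y0 = int(np.clip(round(cy - patch_h / 2), 0, fh - patch_h))
    return frame[y0:y0 + patch_h, x0:x0 + patch_w]
```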

Fish sequence: To detect when a given fish was removed from the ROI, I trained a second layer Extra Trees model using time-series features from first layer predictions of several NN models:

- CNN trained to detect whether there is no fish in the ROI area.

- CNN trained to select the best frame to classify the species (the frame that has annotations).

- CNN trained to detect the 4th frame after the frame with annotations.
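The second-layer Extra Trees models work on time-series features built from per-frame CNN outputs. The feature set below (shifted values plus a centered rolling mean and standard deviation) is an assumed example, not the exact features Daniel_FG used; it only illustrates how per-frame predictions become one feature row per frame.

```python
import numpy as np


def time_series_features(preds, offsets=(-4, -2, -1, 1, 2, 4), window=5):
    """Per-frame features from a 1-D sequence of first-layer CNN outputs.

    preds: predictions for consecutive frames of one video, e.g. P(no fish).
    offsets and window (odd) are hypothetical choices.
    Returns an array of shape (n_frames, 1 + len(offsets) + 2).
    """
    preds = np.asarray(preds, dtype=float)
    n = len(preds)
    # Edge-pad so shifted values exist at the start and end of the video
    pad = max(max(abs(o) for o in offsets), window // 2)
    padded = np.pad(preds, pad, mode="edge")
    cols = [preds]
    for o in offsets:
        # Value o frames later (o > 0) or earlier (o < 0)
        cols.append(padded[pad + o: pad + o + n])
    half = window // 2
    windows = np.stack([padded[pad + k: pad + k + n] for k in range(-half, half + 1)])
    cols.append(windows.mean(axis=0))  # centered rolling mean
    cols.append(windows.std(axis=0))   # centered rolling standard deviation
    return np.column_stack(cols)
```

The resulting matrix could then be passed, together with per-frame labels, to a second-layer model such as scikit-learn’s `ExtraTreesClassifier`.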

Fish bounding box: I found that the classification models performed better using bounding boxes instead of the previous ROI. To extract the bounding box for each fish, I trained another UNET to predict the line representing the fish length and extracted a square box of that dimension. It wasn't very accurate, but it was better than the whole ROI area.
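The square fish box can be derived directly from the predicted length line. This sketch assumes the line’s two endpoints have already been extracted from the UNET mask (the write-up does not say how) and shifts the square so it stays inside the image; `square_box_from_line` is a hypothetical helper.

```python
import math


def square_box_from_line(x1, y1, x2, y2, img_w, img_h):
    """Square box whose side equals the length of the predicted fish line.

    Returns (left, top, right, bottom) in pixels, shifted to stay inside the image.
    """
    # Side length = line length, limited to the image size
    side = max(1, round(math.hypot(x2 - x1, y2 - y1)))
    side = min(side, img_w, img_h)
    # Center the square on the line's midpoint
    cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
    left = min(max(round(cx - side / 2), 0), img_w - side)
    top = min(max(round(cy - side / 2), 0), img_h - side)
    return left, top, left + side, top + side
```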

Fish species classification: I trained a second layer Extra Trees model using time-series features from first layer predictions of two NN architectures: fine-tuned pre-trained VGG-16 and VGG-11 models (https://arxiv.org/abs/1409.1556).

Fish length: I trained a second layer Extra Trees model using time-series features from first layer predictions of the same CNN architecture, varying the inputs (RGB or B&W) and the loss function (MSE or MAE).

Check out Daniel_FG’s full write-up and solution in the competition repo.

Daniil O.

Place: 4th

Prize: $3,000

Hometown: Nizhny Novgorod, Russia

Username: harshml

Background: I’m a freelance research scientist in computer vision, with a master’s degree in applied mathematics and computer science.

Summary of approach:

A recurrent network was designed to find sequences of frames that potentially contain a fish placed on the ruler. On each frame of such a sequence, one 8-class classifier (7 species + background) is applied to the whole frame (global), and another 8-class classifier is applied to the fish region only (local). The fish region is obtained as the most confident detection region provided by the Single Shot MultiBox Detector (SSD). Sequences with a potential fish, as given by the recurrent network, are discarded if the fused species score from the global and local classifiers is too low. The sequences that pass this check are treated as sequences with a unique fish and receive a unique fish id. Species are obtained from the local classifier scores, and length is calculated from the SSD region.
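harshml’s post-processing can be illustrated with a small function that groups consecutive frames flagged by the recurrent network, fuses the global and local class scores, and assigns fish ids. Averaging as the fusion rule and both thresholds are assumptions made for this sketch, and the length computation from the SSD region is omitted.

```python
def fish_sequences(rnn_probs, global_scores, local_scores,
                   seq_threshold=0.5, fuse_threshold=0.5):
    """Turn per-frame outputs into unique fish with ids and species.

    rnn_probs: per-frame probability (recurrent network) that a fish is on the ruler.
    global_scores, local_scores: per-frame lists of 8 class scores
        (index 0 = background, 1..7 = species) from the whole-frame and
        fish-region classifiers.
    """
    fish = []
    n = len(rnn_probs)
    i = 0
    while i < n:
        if rnn_probs[i] < seq_threshold:
            i += 1
            continue
        # Collect one run of consecutive frames flagged by the recurrent network
        start = i
        while i < n and rnn_probs[i] >= seq_threshold:
            i += 1
        frames = range(start, i)
        # Fused score: best species (background excluded) after averaging global and local
        fused = max(
            sum((global_scores[f][c] + local_scores[f][c]) / 2 for f in frames) / len(frames)
            for c in range(1, 8)
        )
        if fused < fuse_threshold:
            continue  # too uncertain: discard this sequence
        # Species from the local classifier scores, summed over the sequence
        species = max(range(1, 8), key=lambda c: sum(local_scores[f][c] for f in frames))
        fish.append({"fish_id": len(fish) + 1, "frames": (start, i - 1), "species": species})
    return fish
```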

Check out harshml’s full write-up and solution in the competition repo.

Cecil Rivers

Place: Judges’ Choice Award

A separate award was created for innovative approaches to solving this problem, even if they did not score in the Top 4. Judges were looking for inventive, novel solutions that might be incorporated into video review programs.

Prize: $2,000

Hometown: Hartford, Connecticut

Username: Kingseso

Background: Cecil Rivers is a technical wizard who uses his electrical engineering skills to make safety products for the electrical distribution industry. Cecil has worked for over 18 years in product development, where he is the inventor on 29 US patents.

Cecil has always had an interest in artificial intelligence, data analysis and machine learning, so he took up the practice of these disciplines as a hobby through the study of free online courses, open source development tools and machine learning competitions. Cecil also has a passion for getting young students interested in coding, robotics and math through classroom demonstrations and presentations. Cecil resides in Connecticut with his lovely wife and two energetic boys.

Summary of approach:

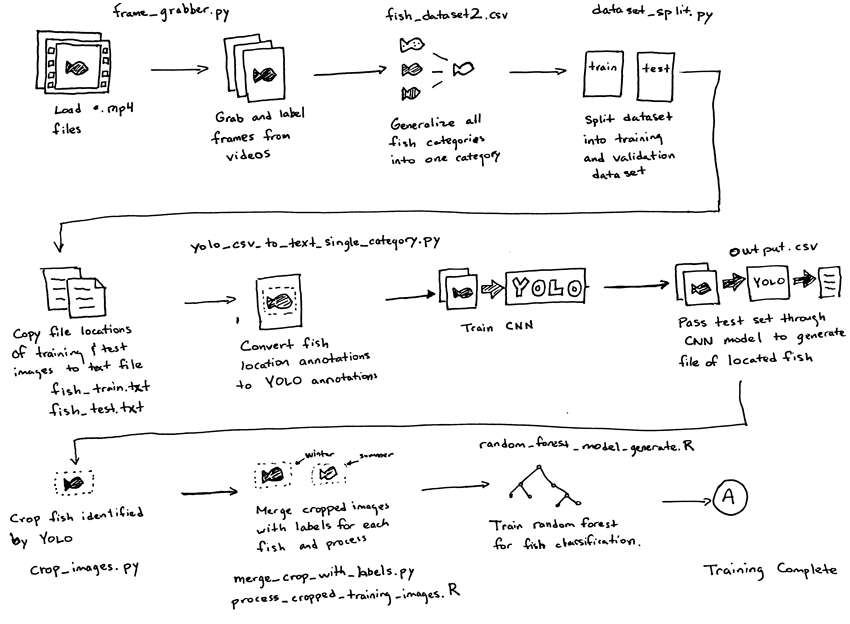

There are several main steps to generate a model that can predict and track the fish.

Training

- Frame Grabber: grabs all the frames in each video and saves them as JPEG files

- Generalize the training CSV file from various species to just a single fish class

- Split the dataset into training and validation sets

- Train YOLO

- Pass test data through the YOLO model and generate annotations for the detected fish

- Crop the training dataset images using the identified annotations

- Flatten each cropped image and place it in a matrix containing all cropped images

- Add the fish species for each cropped image

- Generate a random forest model from the cropped images’ pixels and fish species

The main part of the training is YOLO. YOLO is an object detection program that applies a single neural network to the full image. The network divides the image into regions and predicts bounding boxes and probabilities for each region. The bounding boxes are weighted by the predicted probabilities. Since the training dataset has annotations around each fish, YOLO is ideal because it uses those annotations during the training process.
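The crop, flatten and random forest steps of the training list can be sketched as follows. Crops from YOLO have different sizes, so this sketch assumes each one is resized to a common (hypothetical) size with nearest-neighbour sampling before flattening; the write-up does not say how differing crop sizes were handled.

```python
import numpy as np


def resize_nearest(img, size):
    """Nearest-neighbour resize to (rows, cols) so every crop has the same pixel count."""
    h, w = img.shape[:2]
    rows = np.arange(size[0]) * h // size[0]
    cols = np.arange(size[1]) * w // size[1]
    return img[rows][:, cols]


def crops_to_matrix(frame, boxes, size=(32, 32)):
    """Crop each detected box, resize it, and flatten it into one matrix row per fish.

    boxes: non-empty list of (left, top, right, bottom) pixel boxes, assumed to come from YOLO.
    size: hypothetical common crop size; the write-up does not state one.
    """
    rows = []
    for left, top, right, bottom in boxes:
        crop = frame[top:bottom, left:right]
        rows.append(resize_nearest(crop, size).reshape(-1))
    return np.vstack(rows)
```

With a label vector `y` holding the species of each row, the matrix could be fitted with scikit-learn’s `RandomForestClassifier`, whose `predict_proba` method returns per-species probabilities.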

Prediction

After the random forest model has been created, the test video dataset is ready to be evaluated. As with the training set, a similar set of frame-capturing and cropping steps is performed. Below are the highlights of the processing of the test dataset videos:

- Frame Grabber: grabs all the frames in each video and saves them as JPEG files

- Pass the test data through the YOLO model and generate annotations for the detected fish

- Crop the test dataset images using the identified annotations

- Flatten each cropped image and place it in a matrix containing all cropped images

- Pass the cropped-images matrix through the random forest model to generate the probability of each fish species

- Calculate the fish length using the cropped images’ dimensions
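The last two prediction steps combine the random forest output with the crop geometry. In this sketch, the species is the most probable class and the length is taken as the crop’s diagonal; the diagonal is an assumption, since the write-up only says the length comes from the cropped images’ dimensions, and `crop_prediction` is a hypothetical helper.

```python
import math


def crop_prediction(box, species_probs):
    """Summarize one detected fish from its crop box and classifier output.

    box: (left, top, right, bottom) of the YOLO crop in pixels.
    species_probs: per-species probabilities, e.g. one row of a random
        forest's predict_proba output.
    """
    left, top, right, bottom = box
    w, h = right - left, bottom - top
    # Assumed length rule: diagonal of the crop
    length = math.hypot(w, h)
    # Most probable species index
    species = max(range(len(species_probs)), key=species_probs.__getitem__)
    return {"length": length, "species": species, "confidence": species_probs[species]}
```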

Diagrams of the approach:

Check out Kingseso’s full write-up and solution in the competition repo.

Thanks to all the participants and to our winners! Special thanks to The Nature Conservancy and Gulf of Maine Research Institute for putting together the data for this challenge, and to Kingfisher Foundation, National Fish and Wildlife Foundation, and Walton Family Foundation for funding the $50,000 prize pool. Thanks also go to the core project team: project manager Kate Wing, The Nature Conservancy’s Chris McGuire, engineer Ben Woodward and computer scientist Joseph Paul Cohen.